A new system that uses artificial intelligence to track animal movements is poised to aid a wide range of studies, from exploring new drugs that affect behavior to ecological research. The approach, shown in the video above, can be used with laboratory animals such as fruit flies and mice as well as larger animals.

The technology was developed by Mala Murthy, professor of neuroscience; Joshua Shaevitz, professor of physics and the Lewis-Sigler Institute for Integrative Genomics; Talmo Pereira, graduate student in neuroscience; and then-undergraduate Diego Aldarondo of the Class of 2018. It accurately detects the location of each body part (legs, head, nose and other points) in millions of frames of video.

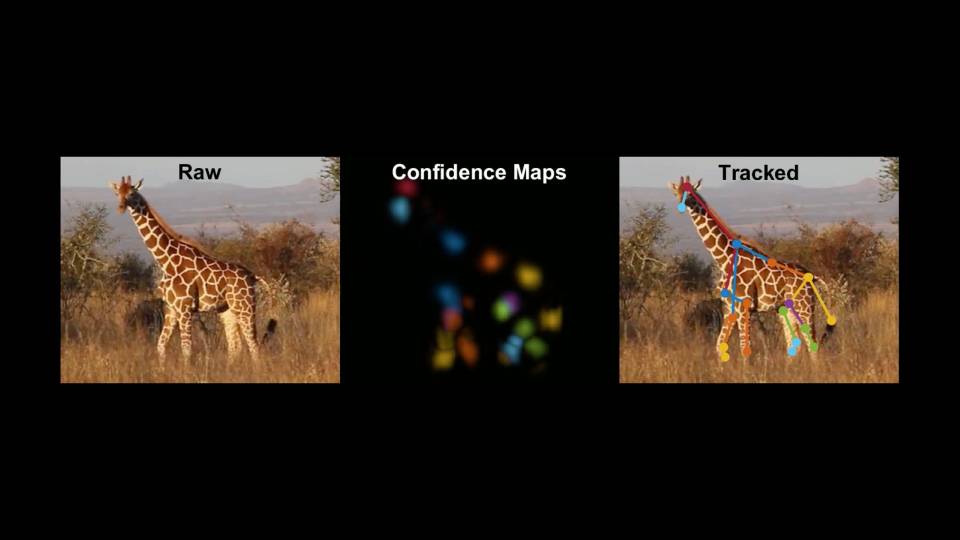

First, a human experimenter records video of a moving animal. Next, the experimenter directs the system’s software to identify a small number of images in which to define body part positions. The system then uses this data set to train a neural network to calculate the location of the points in subsequent frames. The method has recently been extended by Pereira to work not only on videos of a single animal but also on footage of multiple interacting animals, keeping track of animal identities over time.
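To give a feel for what "keeping track of animal identities over time" can involve, here is a minimal sketch in Python. It is not the researchers' method: it assumes, purely for illustration, that each frame already yields one (x, y) position per animal, that the number of animals is the same in every frame, and that each animal moves only a short distance between frames. The function names `match_identities` and `track` are hypothetical.

```python
import math
from itertools import permutations


def match_identities(prev, curr):
    """Reorder curr so that index i refers to the same animal as prev[i].

    Illustrative assumption: the correct assignment is the one that
    minimizes the total distance moved between consecutive frames.
    Brute force over all orderings, so only sensible for a few animals.
    """
    best = min(
        permutations(range(len(curr))),
        key=lambda order: sum(
            math.dist(prev[i], curr[order[i]]) for i in range(len(prev))
        ),
    )
    return [curr[j] for j in best]


def track(frames):
    """Carry identities from the first frame through every later frame."""
    tracks = [list(frames[0])]
    for detections in frames[1:]:
        tracks.append(match_identities(tracks[-1], detections))
    return tracks


# Made-up positions for two animals; the second frame lists them in swapped order.
frames = [
    [(0.0, 0.0), (10.0, 10.0)],
    [(10.5, 9.5), (0.5, 0.2)],
    [(1.0, 0.5), (11.0, 9.0)],
]
tracks = track(frames)
for positions in tracks:
    print(positions)
```

In this toy example the second frame's detections are put back in the order of the first frame, so each column of the output follows one animal. A real system would also have to cope with animals that occlude one another or leave the field of view, which this sketch ignores.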

Funding sources included the National Science Foundation and the National Institutes of Health.

This technology was featured at the annual Celebrate Princeton Innovation (CPI) event in November, which highlights the work of faculty and student researchers who are making discoveries and creating inventions with the potential for broad societal impact. The gathering attracts members of the broader entrepreneurial ecosystem outside the University, such as members of the venture capital community and industry as well as representatives from state and local governments, who come to learn about the newest University discoveries and meet the faculty and staff engaged in Princeton’s innovation initiative.