Princeton researchers applied machine learning methods to develop an optimal policy for ordering common blood tests in a hospital’s intensive care unit. From left: Computer science graduate student Niranjani Prasad, electrical engineering graduate student Li-Fang Cheng and Associate Professor of Computer Science Barbara Engelhardt.

Doctors in intensive care units face a continual dilemma: Every blood test they order could yield critical information, but also adds costs and risks for patients. To address this challenge, researchers from Princeton University are developing a computational approach to help clinicians more effectively monitor patients’ conditions and make decisions about the best opportunities to order lab tests for specific patients.

Using data from more than 6,000 patients, graduate students Li-Fang Cheng and Niranjani Prasad worked with Associate Professor of Computer Science Barbara Engelhardt to design a system that could both reduce the frequency of tests and improve the timing of critical treatments. The team presented their results on Jan. 6 at the Pacific Symposium on Biocomputing in Hawaii.

The analysis focused on four blood tests measuring lactate, creatinine, blood urea nitrogen and white blood cells. These indicators are used to diagnose two dangerous problems for ICU patients: kidney failure and a systemic infection called sepsis.

“Since one of our goals was to think about whether we could reduce the number of lab tests, we started looking at the [blood test] panels that are most ordered,” said Cheng, co-lead author of the study along with Prasad.

The researchers worked with the MIMIC III database, which includes detailed records of 58,000 critical care admissions at Beth Israel Deaconess Medical Center in Boston. For the study, the researchers selected a subset of 6,060 records of adults who stayed in the ICU for between one and 20 days and had measurements for common vital signs and lab tests.

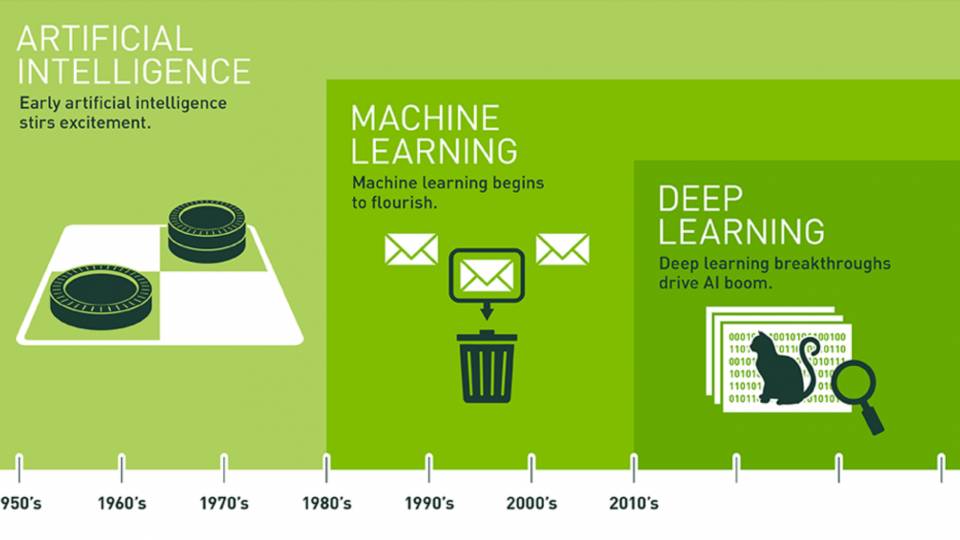

“These medical data, at the scale we’re talking about, basically became available in the last year or two in a way that we can analyze them with machine learning methods,” said Engelhardt, the senior author of the study. “That’s super exciting, and a great opportunity.”

The team’s algorithm uses a “reward function” that encourages a test order based on how informative the test is at a given time. That is, there is greater reward in administering a test if there is a higher probability that a patient’s state is significantly different from the last measurement, and if the test result is likely to suggest a clinical intervention such as initiating antibiotics or assisting breathing through mechanical ventilation. At the same time, the function adds a penalty for the test’s monetary cost and risk to the patient. Prasad noted that, depending on the situation, a clinician could decide to prioritize one of these components over others.
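As a rough illustration of how such a reward might be assembled, the sketch below combines the three ingredients described above: the chance that the patient's state has changed, the chance that the result prompts an intervention, and a penalty for cost and risk. The function name, the weights and the simple linear form are all assumptions made for this example; the article does not give the team's actual formula.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RewardWeights:
    """Hypothetical weights a clinician could tune to prioritize one component."""
    information: float = 1.0   # value of learning that the patient's state changed
    intervention: float = 1.0  # value of a result that prompts treatment
    cost: float = 0.5          # penalty per unit of monetary cost and patient risk


def order_reward(p_state_changed: float,
                 p_triggers_intervention: float,
                 test_cost: float,
                 weights: RewardWeights = RewardWeights()) -> float:
    """Illustrative reward for ordering one lab test at one point in time.

    Assumes a simple weighted sum: informativeness terms add to the reward,
    and the test's cost and risk subtract from it.
    """
    gain = (weights.information * p_state_changed
            + weights.intervention * p_triggers_intervention)
    penalty = weights.cost * test_cost
    return gain - penalty


# A test that is likely informative earns a positive reward ...
likely_useful = order_reward(0.8, 0.6, test_cost=1.0)
# ... while a test unlikely to reveal anything new is penalized.
likely_redundant = order_reward(0.1, 0.0, test_cost=1.0)
```

Raising the `cost` weight in this sketch makes the same test less attractive, which mirrors Prasad's point that a clinician could choose to prioritize one component over the others.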

This approach, known as reinforcement learning, aims to recommend decisions that maximize the reward function. This treats the issue of medical testing “like the sequential decision-making problem it is, where you account for all decisions and all the states you’ve seen in the past time period and decide what you should do at a current time to maximize long-term rewards for the patient,” explained Prasad, a graduate student in computer science.
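The idea of trading an immediate cost against long-term reward can be shown on a toy problem. The sketch below is not the team's algorithm: it runs plain Q-value iteration on a made-up, fully known model in which the state is the number of hours since the last test (capped at three) and the only choices are to wait or to order. Every number, name and transition rule here is a hypothetical stand-in chosen for illustration.

```python
# Toy model (all values hypothetical): state = hours since the last test, capped at 3.
N_STATES = 4
WAIT, ORDER = 0, 1
GAMMA = 0.9  # discount factor: how much future reward counts relative to immediate reward


def step(state: int, action: int) -> tuple[float, int]:
    """Return (reward, next_state) for the toy problem.

    Ordering a test is worth more the staler the last measurement is,
    minus a fixed cost, and it resets the clock. Waiting earns nothing
    and lets the measurement grow one hour staler.
    """
    if action == ORDER:
        return 0.4 * state - 0.5, 0
    return 0.0, min(state + 1, N_STATES - 1)


def q_value_iteration(n_sweeps: int = 200) -> list[list[float]]:
    """Repeatedly apply the Bellman optimality update to a table of Q-values."""
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(n_sweeps):
        new_q = [[0.0, 0.0] for _ in range(N_STATES)]
        for s in range(N_STATES):
            for a in (WAIT, ORDER):
                reward, next_state = step(s, a)
                # Immediate reward plus discounted value of acting well afterward.
                new_q[s][a] = reward + GAMMA * max(q[next_state])
        q = new_q
    return q


def greedy_policy(q: list[list[float]]) -> list[int]:
    """Pick the action with the highest Q-value in each state."""
    return [max((WAIT, ORDER), key=lambda a: q[s][a]) for s in range(N_STATES)]


q_table = q_value_iteration()
policy = greedy_policy(q_table)  # one action (WAIT or ORDER) per state
```

In this toy setting the resulting policy waits while the last measurement is fresh and orders a test only once it has gone stale, even though ordering slightly earlier would already yield a small positive immediate reward. That preference for the better long-run outcome is the behavior the sequential framing is meant to capture.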

Sorting through this information in a timely manner for a clinical setting requires considerable computing power, said Engelhardt, an associated faculty member of the Princeton Institute for Computational Science and Engineering (PICSciE). Cheng, an electrical engineering graduate student, worked with her co-adviser Kai Li, the Paul M. and Marcia R. Wythes Professor in Computer Science, to run the team’s computations using PICSciE resources.

To test the utility of the lab testing policy they developed, the researchers calculated the reward function values that would have resulted from applying their policy and compared them with the values for the testing regimens that were actually used for the 6,060 patients in the training data set, who were admitted to the ICU between 2001 and 2012. They also compared these values to those that would have resulted from randomized lab testing policies.

For each test and reward component, the policy generated by the machine learning algorithm would have led to improved reward values compared to the actual policies used in the hospital. In most cases the algorithm also outperformed random policies. Lactate testing was a notable exception; this could be explained by the relatively low frequency of lactate test orders, leading to a high degree of variance in the informativeness of the test.

Overall, the researchers’ analysis showed that their optimized policy would have yielded more information than did the actual testing regimen that clinicians followed. Using the algorithm could have reduced the number of lab test orders by as much as 44 percent in the case of white blood cell tests. They also showed that this approach would have helped clinicians intervene, sometimes hours sooner, when a patient’s condition began to deteriorate.

“With the lab test ordering policy that this method developed, we were able to order labs to determine that the patient’s health had degraded enough to need treatment, on average, four hours before the clinician actually initiated treatment based on clinician ordered labs,” said Engelhardt.

“There is a scarcity of evidence-based guidelines in critical care regarding the appropriate frequency of laboratory measurements,” said Shamim Nemati, an assistant professor of biomedical informatics at Emory University who was not involved in the study. “Data-driven approaches such as the one proposed by Cheng and co-authors, when combined with a deeper insight into clinical workflow, have the potential to reduce charting burden and cost of excessive testing, and improve situational awareness and outcomes.”

Engelhardt’s group is collaborating with data scientists on Penn Medicine’s Predictive Healthcare Team to introduce this policy in the clinic within the next few years. Such efforts aim to “give clinicians the superpowers that other people in other domains are being given,” said Penn Senior Data Scientist Corey Chivers. “Having access to machine learning, artificial intelligence and statistical modeling with large amounts of data” will help clinicians “make better decisions, and ultimately improve patient outcomes,” he added.

“This is one of the first times we’ll be able to take this machine learning approach and actually put it in the ICU, or in an inpatient hospital setting, and advise caregivers in a way that patients aren’t going to be at risk,” said Engelhardt. “That’s really something novel.”

This work was supported by the Helen Shipley Hunt Fund, which supports research aimed at improving human health; and the Eric and Wendy Schmidt Fund for Strategic Innovation, which supports research in artificial intelligence and machine learning.