After several years of planning and more than a year of construction, Princeton University's High-Performance Computing Research Center opened its doors this week. The facility gives researchers on campus new capacity to tackle some of the world's most complex scientific challenges.

Situated on Princeton’s Forrestal campus, the 47,000-square-foot building is the new home of powerful research computers that can generate models of galaxy formation, track the motion of a single molecule and simulate the seismic forces of an earthquake, among other highly technical tasks.

Located on Princeton's Forrestal campus, the University's High-Performance Computing Research Center opened its doors this week, serving as the new home to the institution's powerful research computers. The center is seen here from Forrestal Road. (Photo by Christopher Lilja)

The facility, which will come fully online when all systems are operational in January, is the centerpiece of Princeton's innovative plan to provide robust computing resources to all faculty members and researchers. Since Jan. 1 alone, the University's existing high-performance computers, which are located at sites across campus and will now be united at the new center, have provided resources for 214 unique researchers from 57 faculty research groups and two undergraduate classes spread among 15 academic departments.

"Princeton's approach is really unique in that it is making these powerful computing resources available to all researchers," said Jeroen Tromp, the Blair Professor of Geology and the director of the Princeton Institute for Computational Science and Engineering (PICSciE), which will oversee the new facility in conjunction with the Office of Information Technology (OIT). "At most universities, researchers work department-by-department or individually to get the computing resources they need."

Today's scientific challenges involve tough problems and data sets so large that they are measured in petabytes, or quadrillions of bytes. High-performance computing involves the use of supercomputers and computer clusters that can tackle difficult calculations and these large data sets. For example, the computers are enabling scientists at Princeton to model shock waves caused by supernova explosions, explore the feasibility of carbon dioxide storage, and design a cheaper and more efficient fuel cell. Such computers also help researchers understand the complexity of the human brain, how schools of fish decide which way to swim and how flocks of birds decide which way to fly.

Water passes through primary chilled water pumps and piping seen here to cool the air that keeps the computers in the high-performance computing center comfortably at room temperature. (Photo by Christopher Lilja)

About 70 percent of the computing power at the new center is to be dedicated to high-performance research computing, while the other 30 percent runs the email, databases and other computing services needed to support campus. The computers were moved from computing facilities at 87 Prospect Ave., the New South Building, and Lewis Library on the University's main campus.

The high-performance research computers are part of Princeton's existing TIGRESS High-Performance Computing Center and Visualization Laboratory in the Lewis Library. TIGRESS is short for Terascale Infrastructure for Groundbreaking Research in Science and Engineering. While the five computers that make up TIGRESS have been moved to the new building, the TIGRESS staff, educational resources, and the visualization laboratory will remain in the Lewis Library on the main campus.

Two of the five computers are new additions, having been purchased to replace aging models. The two are Hecate, which has replaced an older machine with the same name, and Orbital, which replaces a five-year-old high-performance cluster known as Woodhen. The new Hecate has 1,500 processors, providing roughly 1,000 times the performance of the average desktop, and 12 terabytes of memory, which allows it to analyze unprecedentedly large datasets. In January 2012, when all the systems are installed and running, Princeton's computing center will provide computing speed of about 110 teraflops, or 10 percent of the capability — as measured in "FLOPS," or floating point operations per second — of a national supercomputing center.
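The figures quoted above can be checked with a little arithmetic. The short sketch below uses only the numbers in the article; the function names are illustrative, and the per-processor memory figure assumes decimal units and an even split across processors, which the article does not state.

```python
# Figures quoted in the article.
CENTER_TERAFLOPS = 110        # expected speed of the Princeton center
SHARE_PERCENT = 10            # "10 percent of the capability" of a national center
HECATE_PROCESSORS = 1_500
HECATE_MEMORY_TB = 12


def implied_national_teraflops(center_teraflops, share_percent):
    """Speed of a national center implied by the quoted percentage."""
    return center_teraflops * 100 / share_percent


def memory_per_processor_gb(memory_tb, processors):
    """Average memory per processor.

    Assumption: decimal units (1 TB = 1,000 GB) and an even split.
    """
    return memory_tb * 1_000 / processors


national = implied_national_teraflops(CENTER_TERAFLOPS, SHARE_PERCENT)
per_processor = memory_per_processor_gb(HECATE_MEMORY_TB, HECATE_PROCESSORS)
print(f"Implied national-center speed: about {national:,.0f} teraflops")
print(f"Hecate memory per processor: about {per_processor:.0f} GB")
```

In other words, the comparison implies a national center running at roughly 1,100 teraflops, or a little over one petaflop.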

Unique approach provides broad access

Princeton University has created a centralized approach to providing computing resources for faculty, researchers and students. Researchers who want to use the high-performance computers make a request through the Research Computing website, which is managed by PICSciE and OIT.

One team that is using the TIGRESS computers is Associate Professor Anatoly Spitkovsky's group in the Department of Astrophysical Sciences. The team is exploring how astrophysical shock waves, such as the ones generated by supernova explosions, can cause the acceleration of cosmic rays, which are streams of high-energy particles that flow into our solar system from far out in the galaxy.

Computer models offer a way to track the careening particles inside shock waves, but even simplified models are very computationally demanding, as the simulations involve integrating the orbits of tens of billions of particles.
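To give a sense of what "integrating an orbit" means, here is a minimal sketch that follows one charged particle in a uniform magnetic field using the Boris rotation, a standard scheme for this kind of problem. It is not the Spitkovsky group's code: the field, the units and the function names are assumptions chosen for illustration, and a research simulation repeats a step like this for billions of particles while also updating the fields.

```python
import math


def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def boris_step(x, v, b_field, q_over_m, dt):
    """Advance position x and velocity v by one time step dt.

    Magnetic field only (no electric field), non-relativistic.
    The velocity update is a pure rotation, so the speed is preserved.
    """
    t = tuple(q_over_m * b * dt / 2.0 for b in b_field)
    t_squared = sum(c * c for c in t)
    s = tuple(2.0 * c / (1.0 + t_squared) for c in t)
    v_prime = tuple(vi + ci for vi, ci in zip(v, cross(v, t)))
    v_new = tuple(vi + ci for vi, ci in zip(v, cross(v_prime, s)))
    x_new = tuple(xi + vi * dt for xi, vi in zip(x, v_new))
    return x_new, v_new


def integrate_orbit(steps=1000, dt=0.01):
    """Follow one particle gyrating around a field pointing along z."""
    x = (0.0, 0.0, 0.0)
    v = (1.0, 0.0, 0.0)        # illustrative units
    b_field = (0.0, 0.0, 1.0)  # uniform field along z (assumption)
    for _ in range(steps):
        x, v = boris_step(x, v, b_field, q_over_m=1.0, dt=dt)
    return x, v


final_x, final_v = integrate_orbit()
final_speed = math.sqrt(sum(c * c for c in final_v))
print(f"speed after 1,000 steps: {final_speed:.6f}")
```

Because a magnetic field only bends a particle's path, the speed should stay at its starting value; checking that it does is a common sanity test for an orbit integrator.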

According to astrophysicist Anatoly Spitkovsky, Princeton's high-performance computing resources allow researchers to "test existing theories of particle acceleration and help to explain a number of puzzling astronomical observations of supernova remnants and gamma-ray bursts." This image shows the simulation of the structure of magnetic turbulence near an astrophysical shock wave, propagating toward the lower right corner of the image. Collisions of clouds of plasma in space cause the shock waves, generate magnetic fields and accelerate particles. Elongated pockets of plasma are created ahead of the shock by newly accelerated particles and are surrounded by filaments of generated magnetic field. (Image courtesy of Anatoly Spitkovsky)

"Princeton's high-performance computing resources have been invaluable to our group," Spitkovsky said. "They allow us to put to the test existing theories of particle acceleration and help to explain a number of puzzling astronomical observations of supernova remnants and gamma-ray bursts. In addition, TIGRESS computers have served as an excellent training ground in high-performance computing for undergraduate and graduate students, and for postdocs."

Through the Research Computing website, researchers can access a list of trainings and mini-courses on how to use the computing resources. Technical support staff are available to help researchers decide which computer system fits the job, troubleshoot malfunctioning programs and tune software for maximum performance. "This lowers the technological barriers," said Curt Hillegas, director of research computing and director of TIGRESS.

Princeton's centralized research computing approach also enables researchers from different disciplines to talk to each other, said James Stone, associate director of PICSciE and a professor of astrophysical sciences with a joint appointment in the program in applied and computational mathematics. "It is like a playground where you meet your neighbors," said Stone, who called the approach "exceptional."

"You find out what they are doing and you learn from each other," he said.

High-performance computers are helping Princeton engineers explore trapping carbon dioxide as a hydrate as one of several possible technologies currently under study for the capture and long-term storage of this greenhouse gas. This image shows a molecular dynamics study of the melting of carbon dioxide clathrate hydrate. Hydrates are crystalline solids in which guest molecules are trapped within polyhedral water cages. Liquid water molecules are shown in blue, solid-like water molecules in red and carbon dioxide molecules in green. (Image courtesy of Sapna Sarupria)

The high-performance computers are helping Princeton engineers explore underground storage of carbon dioxide. Much is unknown about how carbon dioxide behaves when it comes in contact with water and salt at the extreme temperatures and pressures found in some geologic formations. Yet it is difficult to make such measurements in the lab and even more difficult to do so in the field.

Computer simulations can help researchers explore this question, said Pablo Debenedetti, the Class of 1950 Professor in Engineering and Applied Science and vice dean of the School of Engineering and Applied Science. He and his team use Princeton's computing power to perform a type of modeling called a Monte Carlo simulation to look at how molecules of carbon dioxide, water and salt interact with each other at high temperatures and pressures.

"This is the sort of complicated research question that you can ask in a university environment, and it would be impossible without high-performance computers," Debenedetti said.
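The article does not say which Monte Carlo method the Debenedetti group uses. As a rough illustration of the general idea, the sketch below applies the Metropolis rule, one widely used variety, to a toy problem: a single particle in a spring-like potential at a fixed temperature. The potential, parameter values and function name are assumptions made for the example and have nothing to do with carbon dioxide, water or salt.

```python
import math
import random


def metropolis_harmonic(n_steps, kT=1.0, k_spring=1.0, max_step=1.0, seed=0):
    """Sample positions x with probability proportional to exp(-U(x) / kT).

    U(x) = 0.5 * k_spring * x**2 is a toy potential chosen for illustration.
    Returns the average of x**2 and the fraction of trial moves accepted.
    """
    rng = random.Random(seed)
    x = 0.0
    energy = 0.0
    accepted = 0
    sum_x2 = 0.0
    for _ in range(n_steps):
        # Propose a small random displacement.
        trial = x + rng.uniform(-max_step, max_step)
        trial_energy = 0.5 * k_spring * trial * trial
        delta = trial_energy - energy
        # Metropolis rule: always accept downhill moves; accept uphill
        # moves with probability exp(-delta / kT).
        if delta <= 0.0 or rng.random() < math.exp(-delta / kT):
            x, energy = trial, trial_energy
            accepted += 1
        sum_x2 += x * x
    return sum_x2 / n_steps, accepted / n_steps


mean_x2, acceptance = metropolis_harmonic(200_000)
print(f"average x^2: {mean_x2:.3f} (theory for this toy model: kT / k_spring = 1)")
print(f"fraction of moves accepted: {acceptance:.2f}")
```

Random trial moves are accepted or rejected so that, over many steps, the sampled configurations follow the distribution expected at the chosen temperature. A molecular simulation applies the same accept-or-reject logic to thousands of interacting molecules, which is where the computing demand comes from.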

Meeting researchers' needs on demand

The centralized approach that enables such broad research is successful because it starts with the needs of Princeton researchers, said Jeremiah Ostriker, professor of astrophysical sciences, and PICSciE's director until July 2009. "The researchers and faculty members decide what types of computers they need. For example, some computers are good at visualization while others have a higher throughput, in terms of computations per second."

The savings are also substantial, said Ostriker, who was a leading proponent of the centralized approach when he served as Princeton's provost from 1995 to 2001. Prior to the centralized approach, individual departments were buying and installing research computers in their own buildings.

Installing high-performance computers in individual departments is expensive because the computers consume large amounts of power, generate a lot of heat and require installation of dedicated air conditioners. Often the power supply to campus buildings, especially older ones, was not designed with the kind of heavy-duty electrical wiring and cooling infrastructure needed.

Combining research computing and campus computing made sense, Ostriker said. A central computing facility requires fewer staff, because under the department-by-department system each department had to hire its own computer administrator. The new building is run by three OIT employees: a data center facilities manager and two electronics specialists.

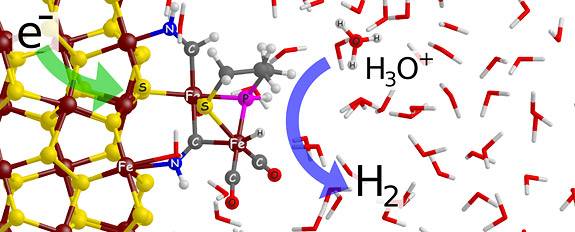

Researchers are using computer modeling to design a catalyst that extracts protons from water and combines them with electrons to produce hydrogen. The catalyst mimics the active site of a naturally occurring enzyme found in bacteria. This image illustrates how hydrogen would be produced by the active site, which features two iron (Fe) atoms (at left) and is stably linked to a pyrite electrode immersed in acidified water (shown at right). (Image courtesy of Annabella Selloni)

This external staff support aids the work being done by such researchers as Professor of Chemistry Annabella Selloni and her group, which is exploring hydrogen generation from water in the search for solutions to world energy needs. The researchers are using computer modeling to design a catalyst that extracts protons from water and combines them with electrons to produce hydrogen. An essential piece of the catalyst is currently based on pricey and rare metals such as platinum. "We are exploring via computer modeling whether a cheaper and more efficient catalyst can be made," Selloni said.

The team's in silico modeling efforts are inspired by a real-life microbial enzyme isolated from hydrogen-producing bacteria. But creating a functional catalyst based on a naturally occurring enzyme is no trivial matter, and requires a basic understanding of the processes involved.

"Our research requires highly sophisticated quantum-mechanical computations," Selloni explained. "These types of calculations can be carried out only on computers like those at Princeton's High-Performance Computing Research Center."

Another of the user groups for the computing facility is the U.S. Department of Energy's Princeton Plasma Physics Lab (PPPL), which is managed by Princeton University. "This computing facility is one of the strengths of being located within the Princeton University neighborhood," said Michael Zarnstorff, deputy director for research at PPPL. "By pulling together we can get a facility that is beyond our ability as individual groups."

In addition to applications in engineering and physics, high-performance computing is being increasingly used in disciplines that until recently required little more than a desktop. In the Department of Ecology and Evolutionary Biology, Assistant Professor Iain Couzin is using computational approaches to understand how complex behaviors such as bird migrations and insect swarms emerge out of actions and interactions among individuals. His team of researchers has developed computational tools to explore how individual organisms, while sensing only their local environments, can adeptly contribute to group behaviors. The researchers have developed a model of how collective behavior traits may have evolved in response to ecological conditions.
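The idea that group behavior can emerge from purely local sensing can be illustrated with a very simple alignment rule: each individual turns toward the average heading of the neighbors it can sense. The sketch below is a generic toy in that spirit, not the Couzin group's model; the sensing radius, speed and function names are assumptions made for the example.

```python
import math


def step(positions, headings, radius, speed=0.1):
    """Advance every individual by one time step.

    Each individual adopts the average heading of all individuals within
    `radius` of it (itself included), then moves forward at `speed`.
    """
    new_headings = []
    for xi, yi in positions:
        sum_cos = sum_sin = 0.0
        for (xj, yj), hj in zip(positions, headings):
            if math.hypot(xj - xi, yj - yi) <= radius:
                sum_cos += math.cos(hj)
                sum_sin += math.sin(hj)
        new_headings.append(math.atan2(sum_sin, sum_cos))
    new_positions = [
        (x + speed * math.cos(h), y + speed * math.sin(h))
        for (x, y), h in zip(positions, new_headings)
    ]
    return new_positions, new_headings


def polarization(headings):
    """Return 1.0 when everyone points the same way, near 0 when headings cancel."""
    n = len(headings)
    mean_cos = sum(math.cos(h) for h in headings) / n
    mean_sin = sum(math.sin(h) for h in headings) / n
    return math.hypot(mean_cos, mean_sin)


positions = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
headings = [0.0, 1.0, 2.0]  # radians
before = polarization(headings)
positions, headings = step(positions, headings, radius=10.0)
after = polarization(headings)
print(f"alignment before: {before:.2f}, after: {after:.2f}")
```

With a sensing radius large enough for every individual to see every other, the group lines up in a single step. Shrinking the radius so that each one senses only nearby neighbors makes alignment spread gradually, which is the kind of local-to-global effect such models are built to study.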

Keeping cool with an eye toward sustainability

To support such hefty computing jobs, the new facility at Forrestal was specially designed to provide power to the computers while using as little energy as possible. The entire ground floor of the new building is dedicated to maintaining the computers. One part of the floor is dedicated to electrical switching, another to backup power supplies in the form of large batteries, and another to creating the cool air that will continuously bathe the machines.

Of all the features in the new Forrestal computing research center, air conditioning is among the most important, Hillegas said. "Anyone who has used a laptop knows that computers generate heat. These high-performance computers generate massive amounts of heat." Three massive water chillers, each the size of an ice-cream truck, cool thousands of gallons of water per day to a temperature of about 45 degrees Fahrenheit. The chilled water in turn cools air that is propelled by fans up and into the second-floor computer room to keep the mighty machines comfortably at room temperature.

In case of a power failure, a 100,000-gallon tank of pre-cooled water stands next to the building, ready for use until a backup generator rumbles to life. The water is cool enough to be used without additional cooling, said Edward T. Borer Jr., manager of Princeton University’s energy plant. "We have a tank full of cold water that we can immediately circulate into the data center to start removing heat," he said.

On the second floor stand rows of cabinets that house the computer servers. Each cabinet generates as much heat as 200 continuously burning light bulbs packed into a space the size of a kitchen refrigerator.

To offset these cooling and power needs, the building has many energy-saving features. During winter, the air conditioning system can be switched off, and giant louvers on the south-facing wall can be opened to let in cold outside air. This feature takes advantage of modern technical standards that allow computers to be exposed to a greater range of temperatures and humidity levels, said Borer.

Other sustainability measures include cooling towers that enable the chillers to be turned off when the outside temperature is near freezing. A second backup generator has a cogeneration feature that harnesses waste heat as energy to chill the water. It runs on natural gas and has a lower carbon footprint than the electricity provided by the power utility. The generator will be switched on when electricity prices are high, thus saving money and lowering Princeton’s carbon footprint.

One of the challenges of planning this building was to "right-size" it for current and future research computing needs, the facility's planners said.

"Research computing is not predictable because the technology changes fairly rapidly," said John Ziegler, director of real estate development at Princeton. The solution was to plan the existing building so that someday it can be doubled in size by constructing a mirror image of the existing facility, with the loading dock and freight elevator at the center. "We developed the site with an eye to the future and the expansion capabilities," Ziegler said.

Offices across campus continue to invest in the future of research computing at Princeton. Support for the new computers comes from a variety of sources, including PICSciE, OIT, the engineering school, the Lewis-Sigler Institute for Integrative Genomics, the Princeton Institute for the Science and Technology of Materials and PPPL, as well as a number of academic departments and faculty members. The acquisition of the new computers was made possible through grants provided by the National Science Foundation, the U.S. Department of Energy, the Air Force Office of Scientific Research, and the David and Lucile Packard Foundation.